What is Edge computing?

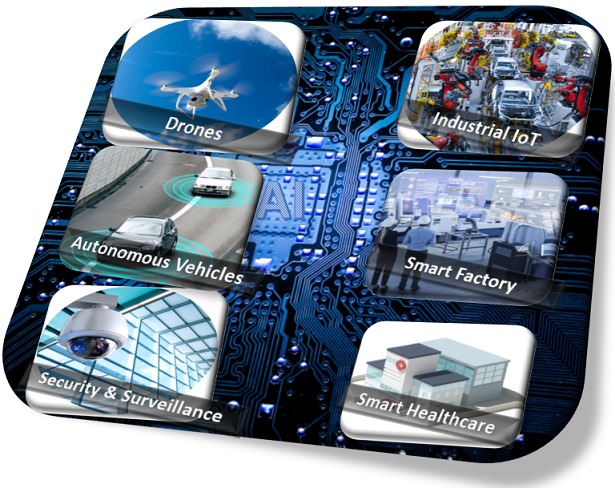

Edge computing brings computation closer to the sources and consumers of data, such as devices, sensors, and users, instead of concentrating it in centralized servers or clouds. By performing computation at the network's edge, edge computing can improve response times, save bandwidth, and enhance reliability. It is beneficial for applications that require real-time processing, low latency, and high reliability, such as drones, autonomous vehicles, smart cities, healthcare, surveillance, smart factories, augmented reality, and the Internet of Things (IoT).

Figure 1: Edge AI use cases

In this blog post, we will explore some of the most promising edge computing innovations that are shaping the future of technology.

Federated learning: a technique that enables distributed devices to collaboratively learn a shared machine learning model without sending raw data to a central server, preserving privacy and reducing communication costs.

Edge AI: the deployment of artificial intelligence models on edge devices, such as smartphones, cameras, and sensors. Edge AI enables faster and more efficient inference and decision-making because data does not have to be sent to a cloud server and the device does not have to rely on network connectivity.

Edge cloud: a concept that extends the cloud computing model to the network's edge, providing scalable and elastic resources for edge applications and services.

Edge security: a challenge that involves protecting the data and devices at the edge from cyberattacks, malicious insiders, and physical threats using techniques such as encryption, authentication, and blockchain.

Federated Learning Benefits and Challenges

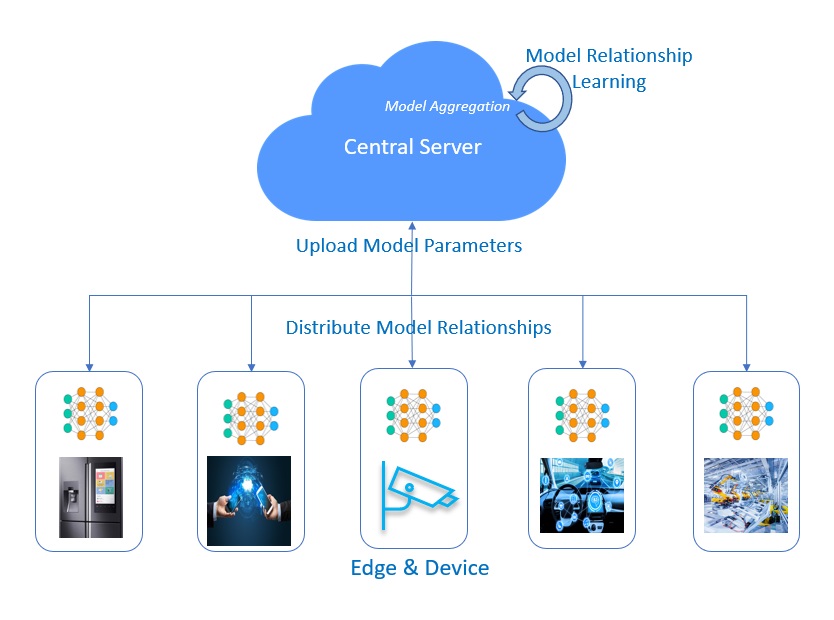

Federated learning is a technique that allows multiple devices to collaborate on training a machine learning model without sharing their raw data. Each device has a local dataset and a local model, which it updates using its own data. The devices communicate with a central server that coordinates learning by sending and receiving model parameters. The server aggregates the parameters from the different devices using a weighted average or another algorithm and broadcasts the updated global model to all devices. The devices then use the global model as the starting point for their next local update. This process repeats until the model converges or meets a predefined criterion.

Figure 2: Federated learning enables distributed devices to learn a shared machine learning model collaboratively without sending raw data to a central server.
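The training loop described above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: it assumes a toy one-dimensional linear model, plain stochastic gradient descent on each device, and a server that averages parameters weighted by each device's dataset size. The function names (`local_update`, `federated_average`, `run_rounds`) and the sample data are hypothetical.

```python
from typing import List, Sequence, Tuple

Weights = List[float]            # [w, b] for the toy model y = w * x + b
Sample = Tuple[float, float]     # (x, y)


def local_update(weights: Weights, data: Sequence[Sample],
                 lr: float = 0.05, epochs: int = 5) -> Weights:
    """Run a few epochs of SGD on one device; raw data never leaves it."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return [w, b]


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Server step: average parameters, weighted by local dataset size."""
    total = sum(sizes)
    return [
        sum(u[i] * n for u, n in zip(updates, sizes)) / total
        for i in range(len(updates[0]))
    ]


def run_rounds(global_weights: Weights,
               client_data: List[Sequence[Sample]],
               rounds: int = 50) -> Weights:
    """Repeat: broadcast the global model, train locally, aggregate."""
    for _ in range(rounds):
        updates = [local_update(list(global_weights), d) for d in client_data]
        sizes = [len(d) for d in client_data]
        global_weights = federated_average(updates, sizes)
    return global_weights


if __name__ == "__main__":
    # Two hypothetical devices whose local data both follow y = 2x + 1.
    clients = [
        [(0.0, 1.0), (1.0, 3.0)],
        [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
    ]
    # The learned parameters should move toward w = 2, b = 1.
    print(run_rounds([0.0, 0.0], clients))
```

Only the two model parameters travel between the devices and the server in this sketch; the `(x, y)` samples stay inside `local_update`.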

Benefits:

Federated learning preserves the privacy of the data owners by avoiding sending raw data to a central server. Instead, only model updates are exchanged, which can be further encrypted or anonymized.

Federated learning reduces communication costs by avoiding the transfer of large amounts of data over the network. Only model updates are exchanged, and these are typically much smaller than the raw data.

Federated learning can enable faster and more accurate learning by leveraging each device's local data and computation power. Instead of relying on a single server that may have limited resources or outdated data, it distributes the learning process across multiple devices that have access to new and diverse data.

Some of the challenges and limitations of federated learning include the following:

Federated learning requires coordination and synchronization among multiple devices with different hardware capabilities, network conditions, and availability.

The data may be statistically heterogeneous: the samples on different devices are often not independent and identically distributed (non-IID), which can slow or destabilize training.

Federated learning may face security and privacy threats from malicious or compromised devices that send false or corrupted model updates to the server or other devices.
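The last challenge can be illustrated with a small sketch. It shows how a single corrupted update can skew a plain average of model parameters, and contrasts that with a coordinate-wise median, which is one commonly discussed mitigation and is not something this post prescribes. The update values and function names are hypothetical.

```python
from statistics import median
from typing import List


def mean_aggregate(updates: List[List[float]]) -> List[float]:
    """Plain average of each parameter across all device updates."""
    n = len(updates)
    return [sum(col) / n for col in zip(*updates)]


def median_aggregate(updates: List[List[float]]) -> List[float]:
    """Coordinate-wise median; less sensitive to a few extreme updates."""
    return [median(col) for col in zip(*updates)]


if __name__ == "__main__":
    # Four honest devices report similar parameters (made-up values).
    honest = [[1.0, 2.0], [1.1, 1.9], [0.9, 2.1], [1.0, 2.0]]
    # One compromised device sends a wildly wrong update.
    poisoned = honest + [[100.0, -100.0]]
    print("mean:  ", mean_aggregate(poisoned))
    print("median:", median_aggregate(poisoned))
```

The median only helps while corrupted updates are a minority; it is a sketch of the idea rather than a complete defense.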

How does Edge AI work, and what are the benefits and challenges it faces?

Edge AI can be applied to applications requiring real-time processing, low latency, and high reliability, such as autonomous vehicles, smart cities, and the Internet of Things (IoT).

Edge AI works as follows. An edge device holds an AI model that was trained offline using cloud resources or federated learning. The device collects data from its local sensors or cameras, feeds the data to the model, performs inference or prediction, and acts on the result. It may also send feedback or updates to the cloud server or other devices to improve the model.
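The workflow above can be sketched as a small on-device loop. This is an illustration under stated assumptions: the "model" is a tiny logistic scorer with made-up weights standing in for a model trained elsewhere, the sensor readings are supplied as plain lists, and `uplink` is a hypothetical callback that represents optional feedback to a cloud server.

```python
import math
from typing import Callable, Iterable, List, Optional

# Hypothetical parameters of a model trained offline (values are made up).
WEIGHTS = [0.8, -0.5]
BIAS = -0.2


def predict(features: List[float]) -> float:
    """Local inference: a logistic score between 0 and 1."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def decide(score: float, threshold: float = 0.5) -> str:
    """Turn the score into an action the device takes on its own."""
    return "alert" if score >= threshold else "idle"


def run_device(readings: Iterable[List[float]],
               uplink: Optional[Callable[[dict], None]] = None) -> List[str]:
    """Collect -> infer -> act, with optional feedback to the cloud."""
    actions = []
    for features in readings:
        score = predict(features)
        action = decide(score)
        actions.append(action)
        # Only noteworthy events are reported upstream, not every reading.
        if uplink is not None and action == "alert":
            uplink({"features": features, "score": score})
    return actions


if __name__ == "__main__":
    sent = []
    print(run_device([[0.0, 0.0], [5.0, 0.0]], uplink=sent.append))
    print(len(sent), "message(s) sent upstream")
```

Because `predict` and `decide` run locally, the loop keeps working when `uplink` is `None`, which mirrors a device that has lost connectivity.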

Edge AI has many benefits and applications:

Improving latency and bandwidth: Edge AI can reduce the delay and cost of sending data to and from the cloud, improving the performance and efficiency of AI applications that require real-time or near-real-time responses, such as autonomous vehicles, smart cameras, drones, and robots.

Enhancing privacy and security: Edge AI can enable data processing and analysis on the device without exposing sensitive or personal information to third parties or potential hackers. This can also help comply with data regulations and policies restricting data transfer across borders or domains.

Enabling scalability and reliability: Edge AI can distribute the workload among multiple devices and nodes, increasing the scalability and reliability of AI applications that handle large volumes or varieties of data, such as IoT sensors, smart grids, and smart cities.

However, Edge AI also poses some challenges and limitations, such as:

Constrained resources: Edge devices typically have limited memory, storage, battery life, processing power, and connectivity compared to cloud servers. This can limit the complexity and accuracy of AI models that run on them and the amount and quality of data that can be collected and processed.

Heterogeneous environments: Edge devices vary widely in hardware specifications, software platforms, operating systems, and network protocols. This can make it difficult to develop and deploy consistent, compatible AI solutions across different devices and scenarios.

Distributed management: Edge devices are often geographically dispersed and dynamically connected, which can complicate managing and coordinating AI applications involving multiple devices and nodes.
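The resource constraint can be made concrete with some arithmetic. The sketch below estimates how much memory a model's parameters occupy at different numeric precisions, and shows a simple symmetric 8-bit quantization of a few weights. Quantization is not covered in this post; it appears here only as one widely used way to trade a little accuracy for a smaller footprint. The parameter count and weight values are hypothetical.

```python
from typing import List, Tuple


def model_size_bytes(num_params: int, bytes_per_param: int) -> int:
    """Memory needed just to hold the parameters."""
    return num_params * bytes_per_param


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric linear quantization into the range [-127, 127]."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = max_abs / 127
    return [round(v / scale) for v in values], scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    params = 1_000_000  # hypothetical model size
    print("float32:", model_size_bytes(params, 4), "bytes")
    print("int8:   ", model_size_bytes(params, 1), "bytes")

    weights = [1.0, -0.5, 0.25, 0.003]  # made-up weights
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print("largest rounding error:", worst)
```

Storing one byte per parameter instead of four cuts the parameter memory to a quarter, at the cost of a rounding error of at most half a quantization step per weight.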

Edge Cloud: Extends the cloud computing model to the network's edge

Edge cloud is a cloud service model that leverages edge computing to provide end users with low-latency, high-bandwidth, and scalable applications. It can be seen as an extension of the traditional cloud, but with distributed and decentralized resources located closer to the edge.

Edge cloud enables faster data processing, lower latency, and improved user experience for applications that require real-time or near-real-time interactions. Edge cloud can also reduce bandwidth costs and network congestion by minimizing the amount of data that needs to be transferred to and from the central cloud.
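The bandwidth point can be illustrated with a short sketch in which an edge node summarizes a batch of sensor readings and forwards only the summary to the central cloud. The readings, the choice of summary fields, and the use of JSON as the wire format are all assumptions made for this example.

```python
import json
from statistics import mean
from typing import Dict, List


def summarize(readings: List[float]) -> Dict[str, float]:
    """Reduce a batch of raw readings to a few aggregate values."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


def payload_bytes(obj) -> int:
    """Size of the object when serialized as UTF-8 encoded JSON."""
    return len(json.dumps(obj).encode("utf-8"))


if __name__ == "__main__":
    # Hypothetical batch of 1000 temperature readings held at the edge node.
    readings = [20.0 + 0.1 * i for i in range(1000)]
    summary = summarize(readings)
    print("raw payload:    ", payload_bytes(readings), "bytes")
    print("summary payload:", payload_bytes(summary), "bytes")
```

Whether a summary is enough depends on the application; some workloads still need the raw data upstream, in which case the saving does not apply.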

Edge security: Secures Data and Devices at the Edge of the Network

Edge security refers to the protection of data and devices at the edge of a network, where they are closer to the end users and the sources of data generation. Edge security has several benefits: it reduces latency, bandwidth consumption, and network congestion, and it enables real-time analysis and decision-making. However, edge security also poses challenges, such as a larger attack surface, the need for more distributed and decentralized management, and compliance with data privacy and sovereignty regulations. Therefore, edge security requires a holistic and adaptive approach that combines encryption, authentication, authorization, firewalls, intrusion detection and prevention, and anomaly detection.
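As one small example of the authentication piece, the sketch below signs messages from an edge device with an HMAC so that the receiver can detect tampering or an unknown sender. It uses Python's standard `hmac` and `hashlib` modules. The shared key, the message fields, and the function names are hypothetical, and the sketch covers message authenticity only: it does not encrypt the payload or handle key distribution.

```python
import hashlib
import hmac
import json


def sign_message(key: bytes, payload: dict) -> dict:
    """Serialize the payload and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True)
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(key: bytes, message: dict) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(
        key, message["body"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


if __name__ == "__main__":
    device_key = b"example-shared-secret"  # placeholder; never hard-code real keys
    msg = sign_message(device_key, {"device": "cam-01", "temp_c": 41.5})
    print("authentic:", verify_message(device_key, msg))

    tampered = dict(msg, body=msg["body"].replace("41.5", "21.5"))
    print("tampered: ", verify_message(device_key, tampered))
```

In practice this would sit alongside transport encryption and per-device credentials rather than replace them.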

Secure Access Service Edge (SASE) is a term introduced by Gartner in 2019 to describe a cloud-based network architecture that integrates WAN capabilities with cloud-native security functions. SASE delivers security and WAN services directly to the source of connection, such as users, devices, IoT devices, or edge computing locations.

Skills for a job in edge computing

Some of the skills that employers look for in edge computing professionals today:

Programming languages: Proficiency in at least one of the popular programming languages for edge computing, such as Python, C#, Java, C++, Go, or Rust. You should also be familiar with frameworks and libraries that support edge computing, such as TensorFlow Lite, PyTorch Mobile, or OpenCV.

Networking: Understanding of networking protocols and concepts, such as TCP/IP, UDP, MQTT, HTTP, and REST. You should also be able to configure and troubleshoot network devices and services, such as routers, switches, firewalls, and VPNs.

Cloud computing: Experience with cloud computing platforms and services, such as AWS, Azure, or Google Cloud. You should be able to use cloud services to manage and monitor edge devices and applications, and to store and analyze data from the edge.

Security: Knowledge of the security challenges and risks associated with edge computing, such as data breaches, cyberattacks, and privacy violations. You should be able to implement security measures and best practices to protect edge devices and data, such as encryption, authentication, and authorization.

IoT: Knowledge of IoT devices and sensors commonly used in edge computing scenarios. You should be able to connect and communicate with IoT devices using various protocols and standards.

Machine learning: Familiarity with machine learning concepts and techniques relevant to edge computing, such as deep learning, computer vision, or natural language processing. You should be able to train and deploy machine learning models on edge devices.

Conclusion

Edge computing is an emerging and exciting field that has the potential to transform various industries and domains by bringing intelligence closer to where it matters and enabling new possibilities and opportunities for innovation and value creation.

References:

- TensorFlow Lite is a mobile library for deploying models on mobile, microcontrollers and other edge devices.

- PyTorch Mobile is in beta stage right now, and is already in wide scale production use.

- ONNX Runtime is a cross-platform machine-learning model accelerator, with a flexible interface to integrate hardware-specific libraries. ONNX Runtime can be used with models from PyTorch, TensorFlow/Keras, TFLite, scikit-learn, and other frameworks.

- AWS IoT Greengrass

- Azure IoT Edge

- Google Cloud IoT offers a host of partner-led solutions

- MQTT: The Standard for IoT Messaging

- The LoRaWAN protocol is a Low Power Wide Area Networking (LPWAN) communication protocol that functions on LoRa.

- Journal of Cloud Computing

- ScienceDirect

- Semantic Scholar

- Gartner Blog: Say Hello to SASE (Secure Access Service Edge)